At the Uniqlo flagship store in Ginza, Tokyo, there was a T-shirt printed with an encoded shell script.

Well, I had to decode it and see the result.

I took a photo with my iPhone and used its built-in OCR, which repeatedly confused 0 (zero), O (capital o) and 8, mixed up 1 and l (lowercase L), and rendered many characters as look-alike glyphs from other Unicode ranges, which are invalid in Base64. It took me some time to fix it all. The final corrected text is this:

#!/bin/bash

eval "$(base64 -d <<< 'IyEvYmluL2Jhc2gKCiMgQ29uZ3Jhd

HVsYXRpb25zISBZb3UgZm91bmQgdGhlIGVhc3RlciBlZ2chIOKdpO+4jwojIOOBiuO

CgeOBp+OBqOOBhuOBlOOBluOBhOOBvuOBme+8gemaoOOBleOCjOOBn+OCteODl+OD

qeOCpOOCuuOCkuimi+OBpOOBkeOBvuOBl+OBn++8geKdpO+4jwoKIyBEZWZpbmUgd

GhlIHRleHQgdG8gYW5pbWF0ZQp0ZXh0PSLimaVQRUFDReKZpUZPUuKZpUFMTOKZpV

BFQUNF4pmlRk9S4pmlQUxM4pmlUEVBQ0XimaVGT1LimaVBTEzimaVQRUFDReKZpUZ

PUuKZpUFMTOKZpVBFQUNF4pmlRk9S4pmlQUxM4pmlIgoKIyBHZXQgdGVybWluYWwg

ZGltZW5zaW9ucwpjb2xzPSQodHB1dCBjb2xzKQpsaW5lcz0kKHRwdXQgbGluZXMpC

gojIENhbGN1bGF0ZSB0aGUgbGVuZ3RoIG9mIHRoZSB0ZXh0CnRleHRfbGVuZ3RoPS

R7I3RleHR9CgojIEhpZGUgdGhlIGN1cnNvcgp0cHV0IGNpdmlzCgojIFRyYXAgQ1RS

TCtDIHRvIHNob3cgdGhlIGN1cnNvciBiZWZvcmUgZXhpdGluZwp0cmFwICJ0cHV0I

GNub3JtOyBleGl0IiBTSUdJTlQKCiMgU2V0IGZyZXF1ZW5jeSBzY2FsaW5nIGZhY3R

vcgpmcmVxPTAuMgoKIyBJbmZpbml0ZSBsb29wIGZvciBjb250aW51b3VzIGFuaW1hd

Glvbgpmb3IgKCggdD0wOyA7IHQrPTEgKSk7IGRvCiAgICAjIEV4dHJhY3Qgb25lIGN

oYXJhY3RlciBhdCBhIHRpbWUKICAgIGNoYXI9IiR7dGV4dDp0ICUgdGV4dF9sZW5nd

Gg6MX0iCiAgICAKICAgICMgQ2FsY3VsYXRlIHRoZSBhbmdsZSBpbiByYWRpYW5zCiA

gICBhbmdsZT0kKGVjaG8gIigkdCkgKiAkZnJlcSIgfCBiYyAtbCkKCiAgICAjIENhb

GN1bGF0ZSB0aGUgc2luZSBvZiB0aGUgYW5nbGUKICAgIHNpbmVfdmFsdWU9JChlY2

hvICJzKCRhbmdsZSkiIHwgYmMgLWwpCgogICAgIyBDYWxjdWxhdGUgeCBwb3NpdGl

vbiB1c2luZyB0aGUgc2luZSB2YWx1ZQogICAgeD0kKGVjaG8gIigkY29scyAvIDIpIC

sgKCRjb2xzIC8gNCkgKiAkc2luZV92YWx1ZSIgfCBiYyAtbCkKICAgIHg9JChwcmlu

dGYgIiUuMGYiICIkeCIpCgogICAgIyBFbnN1cmUgeCBpcyB3aXRoaW4gdGVybWluY

WwgYm91bmRzCiAgICBpZiAoKCB4IDwgMCApKTsgdGhlbiB4PTA7IGZpCiAgICBpZi

AoKCB4ID49IGNvbHMgKSk7IHRoZW4geD0kKChjb2xzIC0gMSkpOyBmaQoKICAgICM

gQ2FsY3VsYXRlIGNvbG9yIGdyYWRpZW50IGJldHdlZW4gMTIgKGN5YW4pIGFuZCAyM

DggKG9yYW5nZSkKICAgIGNvbG9yX3N0YXJ0PTEyCiAgICBjb2xvcl9lbmQ9MjA4CiA

gICBjb2xvcl9yYW5nZT0kKChjb2xvcl9lbmQgLSBjb2xvcl9zdGFydCkpCiAgICBjb

2xvcj0kKChjb2xvcl9zdGFydCArIChjb2xvcl9yYW5nZSAqIHQgLyBsaW5lcykgJSBj

b2xvcl9yYW5nZSkpCgogICAgIyBQcmludCB0aGUgY2hhcmFjdGVyIHdpdGggMjU2L

WNvbG9yIHN1cHBvcnQKICAgIGVjaG8gLW5lICJcMDMzWzM4OzU7JHtjb2xvcn1tIiQ

odHB1dCBjdXAgJHQgJHgpIiRjaGFyXDAzM1swbSIKCiAgICAjIExpbmUgZmVlZCB0b

yBtb3ZlIGRvd253YXJkCiAgICBlY2hvICIiCgpkb25lCgo=')"

When Base64-decoded, this bash script appears:

#!/bin/bash

# Congratulations! You found the easter egg! ❤️

# おめでとうございます！隠されたサプライズを見つけました！❤️

# Define the text to animate

text="♥PEACE♥FOR♥ALL♥PEACE♥FOR♥ALL♥PEACE♥FOR♥ALL♥PEACE♥FOR♥ALL♥PEACE♥FOR♥ALL♥"

# Get terminal dimensions

cols=$(tput cols)

lines=$(tput lines)

# Calculate the length of the text

text_length=${#text}

# Hide the cursor

tput civis

# Trap CTRL+C to show the cursor before exiting

trap "tput cnorm; exit" SIGINT

# Set frequency scaling factor

freq=0.2

# Infinite loop for continuous animation

for (( t=0; ; t+=1 )); do

# Extract one character at a time

char="${text:t % text_length:1}"

# Calculate the angle in radians

angle=$(echo "($t) * $freq" | bc -l)

# Calculate the sine of the angle

sine_value=$(echo "s($angle)" | bc -l)

# Calculate x position using the sine value

x=$(echo "($cols / 2) + ($cols / 4) * $sine_value" | bc -l)

x=$(printf "%.0f" "$x")

# Ensure x is within terminal bounds

if (( x < 0 )); then x=0; fi

if (( x >= cols )); then x=$((cols - 1)); fi

# Calculate color gradient between 12 (cyan) and 208 (orange)

color_start=12

color_end=208

color_range=$((color_end - color_start))

color=$((color_start + (color_range * t / lines) % color_range))

# Print the character with 256-color support

echo -ne "\033[38;5;${color}m"$(tput cup $t $x)"$char\033[0m"

# Line feed to move downward

echo ""

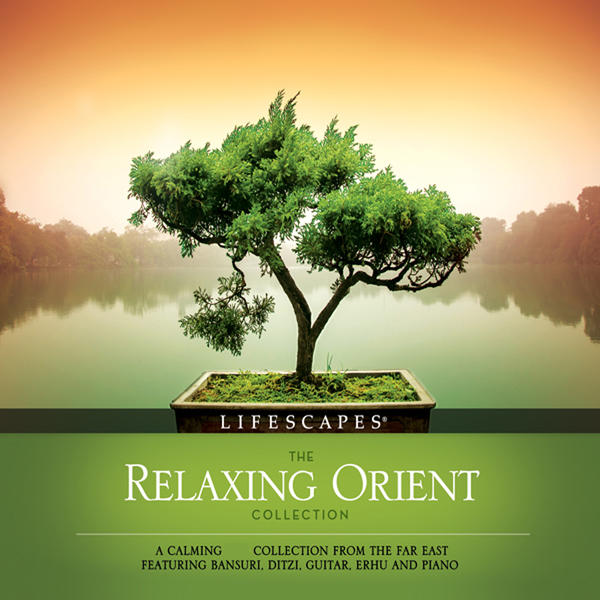

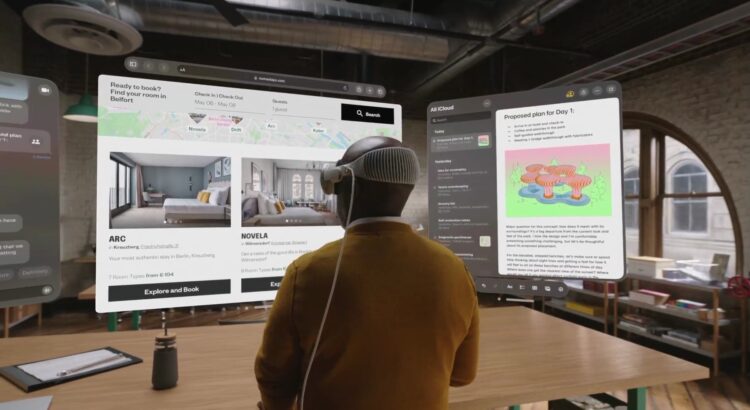

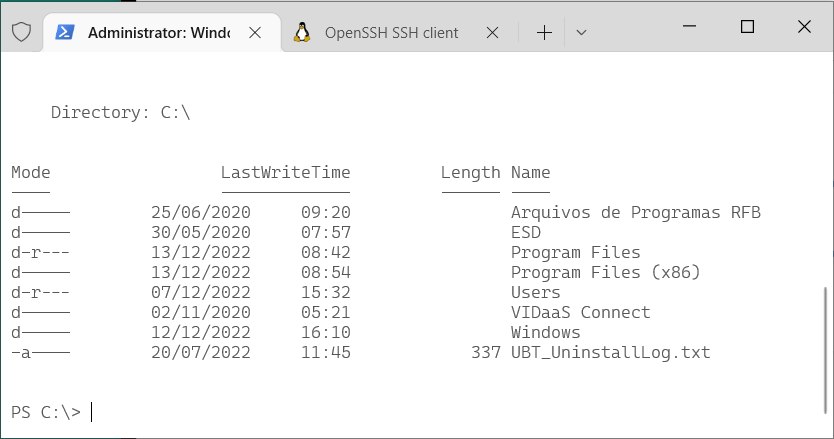

doneThe original encoded text, when executed in my Linux terminal gives a beatiful animation, similar to this:
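The wave shape comes from the x-position formula in the loop: the column swings around the middle of the terminal by a quarter of its width, following the sine of `t * freq`. As a rough sketch, the first few columns can be printed without any cursor movement. This version uses `awk` instead of `bc` purely for convenience, `wave_columns` is a hypothetical helper name, and the width of 80 columns is an assumed value rather than something read from `tput`.

```shell
#!/bin/bash
# Sketch: list the column chosen for each frame of the animation.
# wave_columns is a hypothetical helper; awk stands in for the script's bc calls.
wave_columns() {
    local cols=$1 frames=$2 freq=0.2   # freq matches the decoded script
    awk -v cols="$cols" -v frames="$frames" -v freq="$freq" 'BEGIN {
        for (t = 0; t < frames; t++) {
            # Same formula as the script: centre plus a quarter-width swing.
            x = cols / 2 + (cols / 4) * sin(t * freq)
            # Keep the column inside the terminal, like the script bounds check.
            if (x < 0) x = 0
            if (x > cols - 1) x = cols - 1
            printf "%d %.0f\n", t, x
        }
    }'
}

# Print frame number and column for the first five frames of an 80-column terminal.
wave_columns 80 5
```

Each output line holds the frame number and the rounded column, so frame 0 sits exactly in the middle of the terminal and later frames drift right and then back as the sine value changes.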

After decoding and executing the script, I found other people doing the same:

- Uniqlo’s Peace For All Easter Egg | leewc

- SeenamZaSodaSingha/UNIQLO_Akamai_TShirt_Base64 on GitHub

But I swear I decoded it myself first, without any help.
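For anyone repeating the exercise, part of the OCR cleanup can be scripted, and the payload can be read before it is run. The following is a minimal sketch, assuming GNU `grep` and GNU `base64`. The function names `find_invalid_base64_chars` and `decode_only` are hypothetical, and the sample file is made up for the demo.

```shell
#!/bin/bash
# Sketch: flag characters outside the Base64 alphabet in OCR output,
# then decode a payload for reading (no eval, nothing is executed).
# Function names are hypothetical; assumes GNU grep and GNU base64.

# Print "line-number:content" for every line containing a non-Base64 byte.
find_invalid_base64_chars() {
    # LC_ALL=C makes grep match byte-wise, so multi-byte look-alike glyphs
    # are flagged; -a forces the input to be treated as text.
    LC_ALL=C grep -an '[^A-Za-z0-9+/=]' "$1"
}

# Decode a Base64 string to standard output.
decode_only() {
    printf '%s' "$1" | base64 -d
}

# Demo: line 2 of the sample ends with a fullwidth "A" (U+FF21),
# the kind of look-alike glyph an OCR pass might produce.
sample=$(mktemp)
printf 'IyEvYmluL2Jhc2gK\nIyEvYmluL2Jhc2g\357\274\241\n' > "$sample"
find_invalid_base64_chars "$sample"
rm -f "$sample"

# The first 16 characters of the T-shirt payload decode to the shebang line.
decode_only 'IyEvYmluL2Jhc2gK'
```

This only catches glyphs that are not valid Base64 at all. Swaps such as 0 for O or 1 for l are still valid Base64, so they only show up as garbage in the decoded text and have to be fixed by eye.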