There was a time when Apple macOS was the best platform for handling multimedia (audio, image, video). This might still be true in the GUI space. But Linux presents a much wider range of possibilities when you go to the command line, especially if you want to:

- Process hundreds or thousands of files at once

- Same as above, organized in many folders while keeping the folder structure

- Same as above but with much more fine-grained options, including lossless processing and the pixel-perfect control that most GUI tools won’t give you

The Open Source community has produced state-of-the-art command line tools such as ffmpeg, exiftool and others, which I use every day to do non-trivial things, along with advanced shell scripting. Sure, you can get these tools installed on Mac or Windows, and you can even use almost all of these recipes on those platforms, but Linux is the native platform for these tools, and it is easier to get the environment ready there.

These are my personal notes and I encourage you to understand each step of the recipes and adapt them to your workflows. They are organized in Audio, Video and Image+Photo sections.

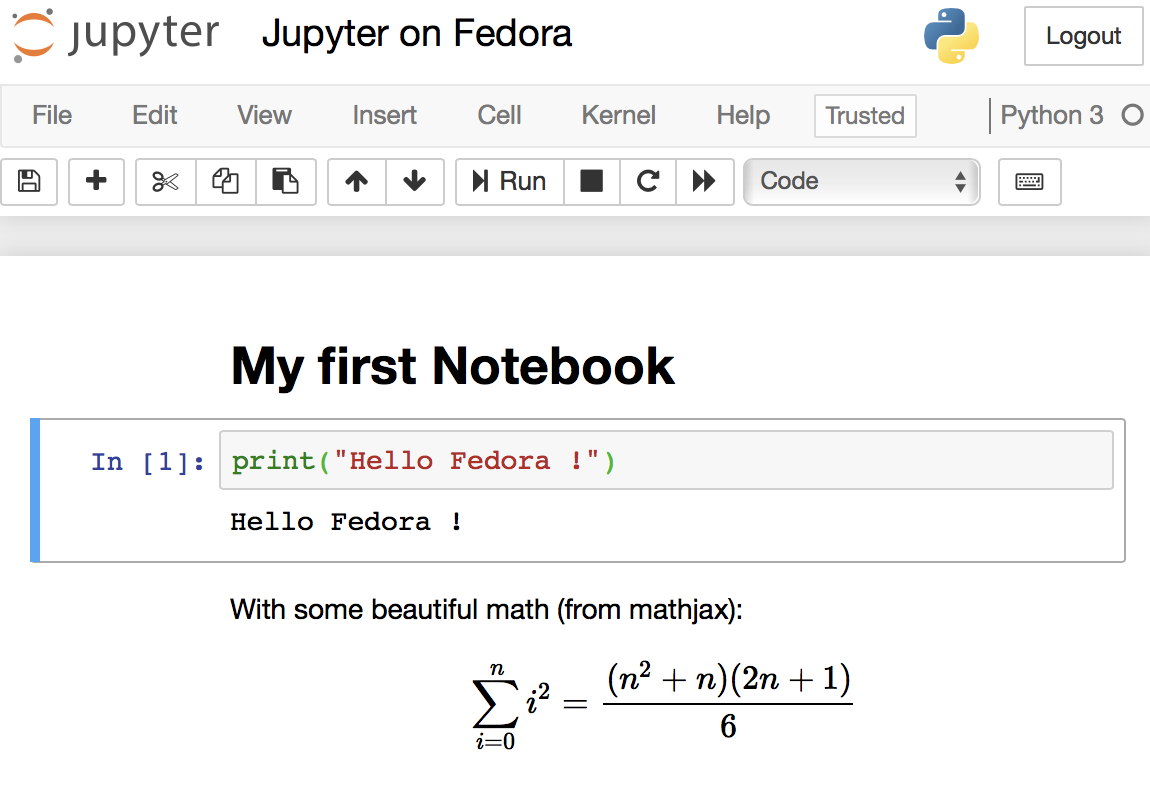

I use Fedora Linux and I mention the Fedora package names to be installed. You can easily find the same packages on Ubuntu, Debian, Gentoo etc., and use these same recipes.

Show information (tags, bitrate etc) about a multimedia file

ffprobe file.mp3

ffprobe file.m4v

ffprobe file.mkv

Lossless conversion of all FLAC files into more compatible, but still Open Source, ALAC

ls *flac | while read f; do

ffmpeg -i "$f" -acodec alac -vn "${f[@]/%flac/m4a}" < /dev/null;

done
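The `ls | while read` loop works for ordinary file names. As a hedged alternative, here is a minimal sketch of the same conversion driven by a shell glob, which copes better with unusual names. The function names `swap_ext` and `flac_to_alac_all` are made up for illustration; `-nostdin` is the ffmpeg option that plays the role of `< /dev/null`.

```shell
#!/usr/bin/env bash
# Sketch: same FLAC -> ALAC conversion, iterating with a glob instead of `ls`.
# swap_ext and flac_to_alac_all are illustrative names, not part of ffmpeg.

# Replace the extension of a file name: swap_ext "song.flac" m4a prints "song.m4a"
swap_ext() {
    printf '%s.%s\n' "${1%.*}" "$2"
}

flac_to_alac_all() {
    for f in ./*.flac; do
        [ -e "$f" ] || continue          # the glob matched nothing
        # -nostdin keeps ffmpeg from reading the terminal, like `< /dev/null`
        ffmpeg -nostdin -i "$f" -acodec alac -vn "$(swap_ext "$f" m4a)"
    done
}
```

Run `flac_to_alac_all` inside the folder that holds the FLAC files.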

Convert all FLAC files into ~190kbps VBR MP3

ls *flac | while read f; do

ffmpeg -i "$f" -qscale:a 2 -vn "${f[@]/%flac/mp3}" < /dev/null;

done

Convert all FLAC files into ~256kbps VBR AAC with Fraunhofer AAC encoder

First, make sure you have the Negativo17 build of FFMPEG, so run this as root:

dnf config-manager --add-repo=http://negativo17.org/repos/fedora-multimedia.repo

dnf update ffmpeg

Now encode:

ls *flac | while read f; do

ffmpeg -i "$f" -vn -c:a libfdk_aac -vbr 5 -movflags +faststart "${f[@]/%flac/m4a}" < /dev/null;

done

It has been said that the Fraunhofer AAC library can’t be legally linked to ffmpeg due to its license terms. In addition, ffmpeg’s default AAC encoder has been improved and is almost as good as Fraunhofer’s, especially for constant bit rate compression. In this case, this is the command:

ls *flac | while read f; do

ffmpeg -i "$f" -vn -c:a aac -b:a 256k -movflags +faststart "${f[@]/%flac/m4a}" < /dev/null;

done

Same as above but under a complex directory structure

This is one of my favorites, and it is extremely powerful. It is very useful when you get a Hi-Fi, complete but useless WMA-Lossless collection and need to convert it losslessly to something more portable, ALAC in this case. Change FMT=flac to FMT=wav or FMT=wma (only when it is WMA-Lossless) to match your source files. Don’t forget to tag the generated files.

FMT=flac

# Create identical directory structure under new "alac" folder

find . -type d | while read d; do

mkdir -p "alac/$d"

done

find . -name "*$FMT" | sort | while read f; do

ffmpeg -i "$f" -acodec alac -vn "alac/${f[@]/%$FMT/m4a}" < /dev/null;

mp4tags -E "Deezer lossless files (https://github.com/Ghostfly/deezDL) + 'ffmpeg -acodec alac'" "alac/${f[@]/%$FMT/m4a}";

done
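As a hedged variation on the recipe above, the sketch below creates each target folder on demand and skips the `alac` output folder while searching, so a second run does not descend into already converted files. `mirror_path` and `convert_tree` are hypothetical names, and the `-print0` / `read -d ''` pairing assumes bash with GNU or BSD find.

```shell
#!/usr/bin/env bash
# Sketch: mirror a source tree into ./alac, creating target folders as needed.
# mirror_path and convert_tree are illustrative names.

# Map "./Artist/Album/01.flac" to "alac/Artist/Album/01.m4a"
mirror_path() {
    rel=${1#./}
    printf 'alac/%s.m4a\n' "${rel%.*}"
}

convert_tree() {
    fmt=${1:-flac}
    # -prune skips the output folder so converted files are not revisited
    find . -path ./alac -prune -o -type f -name "*.$fmt" -print0 |
    while IFS= read -r -d '' f; do
        out=$(mirror_path "$f")
        mkdir -p "$(dirname "$out")"
        ffmpeg -nostdin -i "$f" -acodec alac -vn "$out"
    done
}
```

Call it as `convert_tree flac`, `convert_tree wav` or `convert_tree wma` from the top of the collection.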

Embed lyrics into M4A files

The iPhone and iPod music players can display a file’s embedded lyrics, which is a cool feature. There are several ways to get lyrics into your music files. If you download music from Deezer using SMLoadr, you’ll get files with embedded lyrics. Then, the FLAC to ALAC process above will correctly carry the lyrics over to the M4A container. Another method is to use the beets music tagger and one of its plugins, though beets is very slow at fetching lyrics for entire albums from the Internet.

The third method is manual. Let lyrics.txt be a text file with your lyrics. To tag it into your music.m4a, just do this:

mp4tags -L "$(cat lyrics.txt)" music.m4a

And then check to see the embedded lyrics:

ffprobe music.m4a 2>&1 | less

Convert APE+CUE, FLAC+CUE, WAV+CUE album-in-one-file rips into one-file-per-track ALAC or MP3

If some of your friends have the horrible tendency to commit this crime and rip CDs as one file for the entire CD, there is an automation to fix it. APE is the most difficult case and it is the one I’ll show. FLAC and WAV are shortcuts of this method.

- Make a lossless conversion of the APE file into something more manageable, such as WAV:

ffmpeg -i audio-cd.ape audio-cd.wav

- Now the magic: use the metadata in the CUE file to split the single file into separate tracks, renaming them accordingly. You’ll need the shnsplit command, available in the shntool package on Fedora (to install: yum install shntool). Additionally, CUE files usually use the ISO-8859-1 (Latin1) charset and a conversion to Unicode (UTF-8) is required:

iconv -f Latin1 -t UTF-8 audio-cd.cue | shnsplit -t "%n · %p ♫ %t" audio-cd.wav

- Now you have a series of nicely named WAV files, one per CD track. Let’s convert them into lossless ALAC using one of the above recipes:

ls *wav | while read f; do

ffmpeg -i "$f" -acodec alac -vn "${f[@]/%wav/m4a}" < /dev/null;

done

This will get you lossless ALAC files converted from the intermediary WAV files. You can also convert them into FLAC or MP3 using variations of the above recipes.

Now the files are ready for your tagger.
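The CUE step above assumes the file is Latin1. If you are not sure, a quick validity check avoids double-encoding a file that is already UTF-8. This is only a sketch: `to_utf8` is a hypothetical helper, and falling back to ISO-8859-1 is an assumption that you should adjust to the real source charset.

```shell
#!/usr/bin/env bash
# Sketch: convert a text file to UTF-8 only when it is not valid UTF-8 already.
# to_utf8 is an illustrative name; the ISO-8859-1 fallback is an assumption.

to_utf8() {
    src=$1 dst=$2 from=${3:-ISO-8859-1}
    if iconv -f UTF-8 -t UTF-8 "$src" > /dev/null 2>&1; then
        cp "$src" "$dst"                 # already valid UTF-8, keep the bytes as they are
    else
        iconv -f "$from" -t UTF-8 "$src" > "$dst"
    fi
}
```

For example, `to_utf8 audio-cd.cue audio-cd.utf8.cue` followed by `shnsplit -f audio-cd.utf8.cue -t "%n · %p ♫ %t" audio-cd.wav`.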

Add chapters and soft subtitles from SRT file to M4V/MP4 movie

This is a lossless and fast process: chapters and subtitles are added as tags and streams to the file, and the audio and video streams are not re-encoded.

- Make sure your SRT file is UTF-8 encoded:

bash$ file subtitles_file.srt

subtitles_file.srt: ISO-8859 text, with CRLF line terminators

It is not UTF-8 encoded; it is some ISO-8859 variant, which I need to know in order to convert it correctly. My example uses a Brazilian Portuguese subtitle file, which I know is ISO-8859-1 (Latin1) encoded because most Western European languages use this encoding.

- Let’s convert it to UTF-8:

bash$ iconv -f latin1 -t utf8 subtitles_file.srt > subtitles_file_utf8.srt

bash$ file subtitles_file_utf8.srt

subtitles_file_utf8.srt: UTF-8 Unicode text, with CRLF line terminators

- Check chapters file:

bash$ cat chapters.txt

CHAPTER01=00:00:00.000

CHAPTER01NAME=Chapter 1

CHAPTER02=00:04:31.605

CHAPTER02NAME=Chapter 2

CHAPTER03=00:12:52.063

CHAPTER03NAME=Chapter 3

…

- Now we are ready to add them all to the movie along with setting the movie name and embedding a cover image to ensure the movie looks nice on your media player list of content. Note that this process will write the movie file in place, will not create another file, so make a backup of your movie while you are learning:

MP4Box -ipod \

-itags 'track=The Movie Name:cover=cover.jpg' \

-add 'subtitles_file_utf8.srt:lang=por' \

-chap 'chapters.txt:lang=eng' \

movie.mp4

The MP4Box command is part of GPac.

OpenSubtitles.org has a large collection of subtitles in many languages and you can search its database with the IMDB ID of the movie. And ChapterDB has the same for chapters files.
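If no ready-made chapters file exists for your movie, one can be generated from a list of timestamps. The sketch below prints entries in the CHAPTERnn / CHAPTERnnNAME layout of the chapters.txt example; `make_chapters` is a hypothetical helper and the generic "Chapter N" names are placeholders to edit by hand.

```shell
#!/usr/bin/env bash
# Sketch: print one CHAPTERnn=/CHAPTERnnNAME= pair per timestamp argument.
# make_chapters is an illustrative name; chapter titles are generic placeholders.

make_chapters() {
    n=0
    for ts in "$@"; do
        n=$((n + 1))
        printf 'CHAPTER%02d=%s\nCHAPTER%02dNAME=Chapter %d\n' "$n" "$ts" "$n" "$n"
    done
}
```

Usage: `make_chapters 00:00:00.000 00:04:31.605 00:12:52.063 > chapters.txt`.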

Decrypt and rip a DVD the lossless way

- Make sure you have the RPMFusion and the Negativo17 repos configured

- Install libdvdcss and vobcopy

dnf -y install libdvdcss vobcopy

- Mount the DVD and rip it, has to be done as root

mount /dev/sr0 /mnt/dvd;

cd /target/folder;

vobcopy -m /mnt/dvd .

You’ll get a directory tree with decrypted VOB and BUP files. You can generate an ISO file from them or, much more practical, use HandBrake to convert the DVD titles into MP4/M4V (more compatible with a wide range of devices) or MKV/WEBM files.

Convert 240fps video into 30fps slow motion, the lossless way

Modern iPhones can record videos at 240 or 120fps, so when you watch them at 30fps they look slow-motion. But regular players will play them at 240 or 120fps, hiding the slo-mo effect.

We’ll need to handle audio and video in different ways. The video FPS fix from 240 to 30 is lossless; the audio stretching is lossy.

# make sure you have the right packages installed

dnf install mkvtoolnix sox gpac faac

#!/bin/bash

# Script by Avi Alkalay

# Freely distributable

f="$1"

ofps=30

noext=${f%.*}

ext=${f##*.}

# Get original video frame rate

ifps=`ffprobe -v error -select_streams v:0 -show_entries stream=r_frame_rate -of default=noprint_wrappers=1:nokey=1 "$f" < /dev/null | sed -e 's|/1||'`

echo

# exit if not high frame rate

[[ "$ifps" -ne 120 ]] && [[ "$ifps" -ne 240 ]] && exit

fpsRate=$((ifps/ofps))

fpsRateInv=`awk "BEGIN {print $ofps/$ifps}"`

# lossless video conversion into 30fps through repackaging into MKV

mkvmerge -d 0 -A -S -T \

--default-duration 0:${ofps}fps \

"$f" -o "v$noext.mkv"

# lossless repack from MKV to MP4

ffmpeg -loglevel quiet -i "v$noext.mkv" -vcodec copy "v$noext.mp4"

echo

# extract subtitles, if original movie has it

ffmpeg -loglevel quiet -i "$f" "s$noext.srt"

echo

# resync subtitles using similar method with mkvmerge

mkvmerge --sync "0:0,${fpsRate}" "s$noext.srt" -o "s$noext.mkv"

# get simple synced SRT file

rm "s$noext.srt"

ffmpeg -i "s$noext.mkv" "s$noext.srt"

# remove undesired formatting from subtitles

sed -i -e 's|<font size="8"><font face="Helvetica">\(.*\)</font></font>|\1|' "s$noext.srt"

# extract audio to WAV format

ffmpeg -loglevel quiet -i "$f" "$noext.wav"

# make audio longer based on ratio of input and output framerates

sox "$noext.wav" "a$noext.wav" speed $fpsRateInv

# lossy stretched audio conversion back into AAC (M4A) 64kbps (because we know the original audio was mono 64kbps)

faac -q 200 -w -s --artist a "a$noext.wav"

# repack stretched audio and video into original file while removing the original audio and video tracks

cp "$f" "${noext}-slow.${ext}"

MP4Box -ipod -rem 1 -rem 2 -rem 3 -add "v$noext.mp4" -add "a$noext.m4a" -add "s$noext.srt" "${noext}-slow.${ext}"

# remove temporary files

rm -f "$noext.wav" "a$noext.wav" "v$noext.mkv" "v$noext.mp4" "a$noext.m4a" "s$noext.srt" "s$noext.mkv"
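The script strips only a trailing "/1" from the frame rate reported by ffprobe, so a rate written as another fraction (for example 240000/1001) would not pass the integer test. The sketch below shows one possible way to do the two calculations, rounding the input rate and computing the sox speed factor. `round_fps` and `speed_factor` are hypothetical helpers, not part of the original script.

```shell
#!/usr/bin/env bash
# Sketch of the frame-rate arithmetic used by the slow-motion script.
# round_fps and speed_factor are illustrative names.

# Round an ffprobe rate such as "240/1" or "30000/1001" to a whole number
round_fps() {
    echo "$1" | awk -F/ '{ d = ($2 == "" ? 1 : $2); printf "%d\n", $1 / d + 0.5 }'
}

# Factor passed to `sox ... speed`: output fps divided by input fps
speed_factor() {
    awk -v i="$1" -v o="$2" 'BEGIN { print o / i }'
}
```

For a 240fps clip slowed to 30fps the factor is 0.125, so the audio becomes eight times longer, matching the eight-fold longer video.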

1 Photo + 1 Song = 1 Movie

If the audio is already AAC-encoded (may also be ALAC-encoded), create an MP4/M4V file:

ffmpeg -loop 1 -framerate 0.2 -i photo.jpg -i song.m4a -shortest -c:v libx264 -tune stillimage -vf scale=960:-1 -c:a copy movie.m4v

The above method will create a very efficient 0.2 frames per second (-framerate 0.2) H.264 video from the photo while simply adding the audio losslessly. Such very-low-frames-per-second video may present sync problems with subtitles on some players. In this case simply remove the -framerate 0.2 parameter to get a regular 25fps video with the cost of a bigger file size.

The -vf scale=960:-1 parameter tells FFMPEG to resize the image to a width of 960px and calculate the proportional height. Remove it in case you want a video with the same resolution as the photo. A 12-megapixel photo file (around 4032×3024) will get you a near-4K video.
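To preview the height that a proportional resize would produce, the arithmetic can be done by hand. This is a small sketch with a hypothetical helper, `scaled_height`; it rounds to the nearest integer, which should match what the scale filter picks in the common case.

```shell
#!/usr/bin/env bash
# Sketch: proportional height for a given target width, rounded to nearest integer.
# scaled_height is an illustrative name: scaled_height <width> <height> <target_width>

scaled_height() {
    awk -v w="$1" -v h="$2" -v t="$3" 'BEGIN { printf "%d\n", h * t / w + 0.5 }'
}
```

A 4032×3024 photo scaled to 960px wide comes out 720px high. If the result is odd, libx264 with its usual pixel format may refuse it; the scale filter also accepts -2 in place of -1 to pick a height divisible by 2.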

If the audio is MP3, create an MKV file:

ffmpeg -loop 1 -framerate 0.2 -i photo.jpg -i song.mp3 -shortest -c:v libx264 -tune stillimage -vf scale=960:-1 -c:a copy movie.mkv

If audio is not AAC/M4A but you still want an M4V file, convert audio to AAC 192kbps:

ffmpeg -loop 1 -framerate 0.2 -i photo.jpg -i song.mp3 -shortest -c:v libx264 -tune stillimage -vf scale=960:-1 -c:a aac -strict experimental -b:a 192k movie.m4v

See more about FFMPEG photo resizing.

There is also a more efficient and completely lossless way to turn a photo into a video with audio, using extended podcast techniques. But that’s much more complicated and requires advanced use of GPAC’s MP4Box and NHML. In case you are curious, see the Podcast::chapterize() and Podcast::imagify() methods in my music-podcaster script. The trick is to create an NHML (XML) file referencing the image(s) and add it as a track to the M4A audio file.

Move images with no EXIF header to another folder

mkdir noexif;

exiftool -filename -T -if '(not $datetimeoriginal or ($datetimeoriginal eq "0000:00:00 00:00:00"))' *HEIC *JPG *jpg | while read f; do mv "$f" noexif/; done

Set EXIF photo create time based on file create time

Warning: use this only if the image files have the correct time on the filesystem and if they don’t have an EXIF header. Note that the command uses the file’s modification time (FileModifyDate).

exiftool -overwrite_original '-DateTimeOriginal< ${FileModifyDate}' *CR2 *JPG *jpg

Rotate photos based on EXIF’s Orientation flag, plus make them progressive. Lossless

jhead -autorot -cmd "jpegtran -progressive '&i' > '&o'" -ft *jpg

Rename photos to a more meaningful filename

This process will rename the silly, sequential, confusing and meaningless photo file names that come from your camera into a readable, sortable and useful format. Example:

IMG_1234.JPG ➡ 2015.07.24-17.21.33 • Max playing with water【iPhone 6s✚】.jpg

Note that the new file name has the date and time the photo was taken, what’s in the photo and the camera model that was used.

- First keep the original filename, as it came from the camera, in the OriginalFileName tag:

exiftool -overwrite_original '-OriginalFileName<${filename}' *CR2 *JPG *jpg

- Now rename:

exiftool '-filename<${DateTimeOriginal} 【${Model}】%.c.%e' -d %Y.%m.%d-%H.%M.%S *CR2 *HEIC *JPG *jpg

- Remove the ‘0’ index if not necessary:

\ls *HEIC *JPG *jpg *heic | while read f; do

nf=`echo "$f" | sed -e 's/0.JPG/.jpg/i; s/0.HEIC/.heic/i'`;

t=`echo "$f" | sed -e 's/0.JPG/1.jpg/i; s/0.HEIC/1.heic/i'`;

[[ ! -f "$t" ]] && mv "$f" "$nf";

done

Alternative for macOS without SED:

\ls *HEIC *JPG *jpg *heic | perl -e '

while (<>) {

chop; $nf=$_; $t=$_;

$nf=~s/0.JPG/.jpg/i; $nf=~s/0.HEIC/.heic/i;

$t=~s/0.JPG/1.jpg/i; $t=~s/0.HEIC/1.heic/i;

rename($_,$nf) if (! -e $t);

}'

- Optional: make lower case extensions:

\ls *HEIC *JPG | while read f; do

nf=`echo "$f" | sed -e 's/JPG/jpg/; s/HEIC/heic/'`;

mv "$f" "$nf";

done

- Optional: simplify camera name, for example turn “Canon PowerShot G1 X” into “Canon G1X” and make lower case extension at the same time:

\ls *HEIC *JPG *jpg *heic | while read f; do

nf=`echo "$f" | sed -e 's/Canon PowerShot G1 X/Canon G1X/;

s/iPhone 6s Plus/iPhone 6s✚/;

s/iPhone 7 Plus/iPhone 7✚/;

s/Canon PowerShot SD990 IS/Canon SD990 IS/;

s/HEIC/heic/;

s/JPG/jpg/;'`;

mv "$f" "$nf";

done

You’ll get file names such as 2015.07.24-17.21.33 【Canon 5D Mark II】.jpg. If you took more than one photo in the same second, exiftool will automatically add an index before the extension.
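The sed expressions in the index-removal step match "0" followed by any character, so a name ending in "10.JPG" would also lose its zero. As a hedged alternative, here is a sketch that strips the index only when it is exactly "0" right after the closing 】 bracket, using shell pattern matching. `strip_zero_index` and `strip_zero_indexes` are hypothetical names, and the sketch assumes that a sibling photo taken in the same second carries index 1 and the same extension.

```shell
#!/usr/bin/env bash
# Sketch: remove a lone "0" index after the closing bracket and lower-case the
# extension. strip_zero_index and strip_zero_indexes are illustrative names.

# "…】0.JPG" becomes "…】.jpg"; names with other indexes only get a lower-case extension
strip_zero_index() {
    ext=$(printf '%s' "${1##*.}" | tr 'A-Z' 'a-z')
    base=${1%.*}
    case $base in
        *】0) base=${base%0} ;;
    esac
    printf '%s.%s\n' "$base" "$ext"
}

strip_zero_indexes() {
    for f in ./*】0.*; do
        [ -e "$f" ] || continue
        # keep the index when a sibling with index 1 exists (same-second photos)
        sibling="${f%0.*}1.${f##*.}"
        [ -e "$sibling" ] || mv -- "$f" "$(strip_zero_index "$f")"
    done
}
```

Run `strip_zero_indexes` in the folder right after the exiftool rename.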

Even more semantic photo file names based on Subject tag

\ls *【*】* | while read f; do

s=`exiftool -T -Subject "$f"`;

if [[ " $s" != " -" ]]; then

nf=`echo "$f" | sed -e "s/ 【/ • $s 【/; s/\:/∶/g;"`;

mv "$f" "$nf";

fi;

done

Full rename: a consolidation of some of the previous commands

exiftool '-filename<${DateTimeOriginal} • ${Subject} 【${Model}】%.c.%e' -d %Y.%m.%d-%H.%M.%S *CR2 *JPG *HEIC *jpg *heic

Set photo “Creator” tag based on camera model

- First list all cameras that contributed photos to current directory:

exiftool -T -Model *jpg | sort -u

The output is the list of camera models found in these photos:

Canon EOS REBEL T5i

DSC-H100

iPhone 4

iPhone 4S

iPhone 5

iPhone 6

iPhone 6s Plus

- Now set creator on photo files based on what you know about camera owners:

CRE="John Doe"; exiftool -overwrite_original -creator="$CRE" -by-line="$CRE" -Artist="$CRE" -if '$Model=~/DSC-H100/' *.jpg

CRE="Jane Black"; exiftool -overwrite_original -creator="$CRE" -by-line="$CRE" -Artist="$CRE" -if '$Model=~/Canon EOS REBEL T5i/' *.jpg

CRE="Mary Doe"; exiftool -overwrite_original -creator="$CRE" -by-line="$CRE" -Artist="$CRE" -if '$Model=~/iPhone 5/' *.jpg

CRE="Peter Black"; exiftool -overwrite_original -creator="$CRE" -by-line="$CRE" -Artist="$CRE" -if '$Model=~/iPhone 4S/' *.jpg

CRE="Avi Alkalay"; exiftool -overwrite_original -creator="$CRE" -by-line="$CRE" -Artist="$CRE" -if '$Model=~/iPhone 6s Plus/' *.jpg
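The `-if '$Model=~/iPhone 5/'` conditions are regular expression matches, so they would also match a model such as "iPhone 5s". If that matters for your collection, an exact lookup table is one option. The sketch below is only an illustration: `creator_for_model` and `tag_creators` are hypothetical names, the owner names are the ones from the example, and running exiftool once per file is slower than the one-liners.

```shell
#!/usr/bin/env bash
# Sketch: exact camera-model -> owner lookup, then tag each photo accordingly.
# creator_for_model and tag_creators are illustrative names.

creator_for_model() {
    case $1 in
        "DSC-H100")            echo "John Doe" ;;
        "Canon EOS REBEL T5i") echo "Jane Black" ;;
        "iPhone 5")            echo "Mary Doe" ;;
        "iPhone 4S")           echo "Peter Black" ;;
        "iPhone 6s Plus")      echo "Avi Alkalay" ;;
        *)                     return 1 ;;   # unknown camera
    esac
}

tag_creators() {
    for f in ./*.jpg; do
        [ -e "$f" ] || continue
        model=$(exiftool -s3 -Model "$f")    # -s3 prints the bare tag value
        cre=$(creator_for_model "$model") || continue
        exiftool -overwrite_original -creator="$cre" -by-line="$cre" -Artist="$cre" "$f"
    done
}
```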

Recursively search people in photos

If you geometrically mark people’s faces and their names in your photos using tools such as Picasa, you can easily search for the photos which contain “Suzan” or “Marcelo” this way:

exiftool -fast -r -T -Directory -FileName -RegionName -if '$RegionName=~/Suzan|Marcelo/' .

-Directory, -FileName and -RegionName specify the things you want to see in the output. You can remove -RegionName for a cleaner output.

The -r is to search recursively. This is pretty powerful.

Make photos timezone-aware

Your camera will tag your photos only with local time in the CreateDate or DateTimeOriginal tags. There is another set of tags called GPSDateStamp and GPSTimeStamp that must contain the UTC time the photos were taken, but your camera won’t help you here. Fortunately, you can derive these values if you know the timezone in which the photos were taken. Here are two examples, one for photos taken in timezone -02:00 (Brazil daylight saving time) and one for timezone +09:00 (Japan):

exiftool -overwrite_original '-gpsdatestamp<${CreateDate}-02:00' '-gpstimestamp<${CreateDate}-02:00' '-TimeZone<-02:00' '-TimeZoneCity<São Paulo' *.jpg

exiftool -overwrite_original '-gpsdatestamp<${CreateDate}+09:00' '-gpstimestamp<${CreateDate}+09:00' '-TimeZone<+09:00' '-TimeZoneCity<Tokyo' Japan_Photos_folder

Use exiftool to check results on a modified photo:

exiftool -s -G -time:all -gps:all 2013.10.12-23.45.36-139.jpg

[EXIF] CreateDate : 2013:10:12 23:45:36

[Composite] GPSDateTime : 2013:10:13 01:45:36Z

[EXIF] GPSDateStamp : 2013:10:13

[EXIF] GPSTimeStamp : 01:45:36

This shows that the local time when the photo was taken was 2013:10:12 23:45:36. To use exiftool to set timezone to -02:00 actually means to find the correct UTC time, which can be seen on GPSDateTime as 2013:10:13 01:45:36Z. The difference between these two tags gives us the timezone. So we can read photo time as 2013:10:12 23:45:36-02:00.
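The same local-to-UTC arithmetic can be checked from the shell before touching any photo. This is a minimal sketch that assumes GNU date (the `-d` option); `local_to_utc` is a hypothetical helper that takes a timestamp in the EXIF layout and a timezone offset.

```shell
#!/usr/bin/env bash
# Sketch: convert an EXIF-style local timestamp plus offset to UTC.
# Requires GNU date. local_to_utc is an illustrative name.

local_to_utc() {
    day=$(printf '%s' "${1%% *}" | tr ':' '-')    # 2013:10:12 becomes 2013-10-12
    clock=${1#* }                                   # 23:45:36
    date -u -d "${day}T${clock}${2}" '+%Y:%m:%d %H:%M:%SZ'
}
```

`local_to_utc '2013:10:12 23:45:36' '-02:00'` prints 2013:10:13 01:45:36Z, the same value exiftool shows in GPSDateTime.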

Geotag photos based on time and Moves mobile app records

Moves is an amazing app for your smartphone that simply records for yourself (not social and not shared) everywhere you go and all places visited, 24h a day.

- Make sure all photos’ CreateDate or DateTimeOriginal tags are correct and precise. The simplest way to achieve this is to set the camera clock correctly before taking the pictures.

- Login and export your Moves history.

- Geotag the photos informing ExifTool the timezone they were taken, -08:00 (Las Vegas) in this example:

exiftool -overwrite_original -api GeoMaxExtSecs=86400 -geotag ../moves_export/gpx/yearly/storyline/storyline_2015.gpx '-geotime<${CreateDate}-08:00' Folder_with_photos_from_trip_to_Las_Vegas

Some important notes:

- It is important to put the entire ‘-geotime’ parameter inside single quotes ('), as I did in the example.

- The ‘-geotime’ parameter is needed even if image files are timezone-aware (as per previous tutorial).

- The ‘-api GeoMaxExtSecs=86400’ parameter should not be used unless the photo was taken more than 90 minutes away from any movement detected by the GPS.

Concatenate all images together in one big image

- In 1 column and 8 lines:

montage -mode concatenate -tile 1x8 *jpg COMPOSED.JPG

- In 8 columns and 1 line:

montage -mode concatenate -tile 8x1 *jpg COMPOSED.JPG

- In a 4×2 matrix:

montage -mode concatenate -tile 4x2 *jpg COMPOSED.JPG

The montage command is part of the ImageMagick package.
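When the number of images varies, the -tile value can be computed instead of typed by hand. A small sketch, with a hypothetical helper `tile_geometry` that takes the image count and the desired number of columns and rounds the number of rows up:

```shell
#!/usr/bin/env bash
# Sketch: build a COLSxROWS tile value for montage from an image count.
# tile_geometry is an illustrative name: tile_geometry <count> <columns>

tile_geometry() {
    count=$1 cols=$2
    rows=$(( (count + cols - 1) / cols ))     # integer division, rounded up
    echo "${cols}x${rows}"
}
```

For example: `set -- *jpg; montage -mode concatenate -tile "$(tile_geometry $# 4)" "$@" COMPOSED.JPG`.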
